Funnel Analytics Reuse

Funnel Analytics Reuse

It's hard to re-use SQL. Let's count the ways:

Scenario 1

You have a funnel dashboard that goes to the CEO every day. One monday, it doesn't update. You grab some coffee, check chat, and trace the issue to an upstream table that hasn't update.

You ping the oncall. They reply - "oh, that table? It's deprecated."

They give you a new table to reference. The columns are different; it's a little less aggregated, and the split off some info into some other tables.

How hard is it for you to get the dashboard running?

Scenario 2

You've built a nice report in SQL. It aggregates some datapoints, builds a cache, and you can build a PDF off it. You get asked to adapt it to a different part of the business.

They use a totally different data model.

How much SQL can you reuse?

The Problem

In both cases, the answer is often that it's a lot of work, and you have to change most of your code. You can be inspired by your current query, but direct reuse is hard.

Why is that?

There's a few things that contribute:

- Operations - joins, selects, etc - are expressed relative to tables. If tables change, the entire structure of queries changes.

- Changes to the 'grain', or primary keys of table, can introduce large cascading refactoring needs

Potential Solutions

A lot of people have worked on smart solutions to this problem. They tend to gravitate towards removing SQL from the equation entirely, relying on virtualization/templating, or building on views that can be transparently updated to reduce coupling.

These all have their pros and cons:

- Dropping SQL prevents you from using the best language for expressing many data operations

- Virtualization/templating adds in extra complexity in the development process, that is recurred when refactoring is required

- Views can have performance issues, and tend to have issues managing evolution

Trilogy's Approach

Trilogy makes this easier by separating business logic from any materialized assets in the warehouse. As an example, let's think through a funnel analysis.

What's a funnel?

PopSQL has a good writeup here.

Being inspired by that, we'll start with a 4 stage funnel. We'll create an integer label for the steps - easy to sort, join, performant - and a text label.

key step int; # the integer ID of the funnel step

property step.name <- CASE WHEN step = 1 then 'Visit'

WHEN step=2 then 'Sign up'

WHEN step=3 then 'Activate'

WHEN step=4 then 'Purchase'

END; # the name of the funnel step

Now, what would a typical funnel report look like ? We want something like this

| Step | Count | lag | drop_off |

|----------|-------|-----|----------|

| Visit | 593 | | |

| Sign Up | 142 | 593 | 0.76 |

| Activate | 127 | 142 | 0.11 |

| Purchase | 6 | 127 | 0.95 |

That's simple enough to express off our query. Note that the annoying shenanigans to get a nice percent format. We'll talk later about how to simplify that.

WITH funnel_report as

SELECT

--step,

name,

count(customer_id) -> customer_count,

round(1 - customer_count ::float/

lag 1 customer_count order by step asc,

2) -> drop_off

ORDER BY

step asc

;

And that's it! We're done!

Wait a second... where's our data?

Up until this point, we've been able to work entirely at the logical layer; if this code compiles, type comparisons check out.

But to pull numbers, we need to hook ourselves up to a database. Let's plug some in. Using the great PopSQL example, we have an event table:

key time datetime;

key event_name string;

key user_id int;

key distinct_id int;

# raw data

datasource events (

time:time,

name:event_name,

distinct_id: distinct_id)

address events;

How can we use this to run our funnel analysis?

Per the description, this data isn't pure clean funnel data, so we'll ensure each ID hit the funnel pages in the order we want.

Let's write a quick query to do the transformation we need.

with funnel_inputs as

SELECT

CASE

WHEN event_name = 'View Landing Page' then 1

WHEN event_name = 'Sign Up' then 2

WHEN event_name = 'New Canvas' then 3

WHEN event_name = 'Start Subscription' then 4

END->funnel,

lag 1 funnel over distinct_id order by funnel asc -> prior_step,

distinct_id

having

funnel = 1 or prior_step = funnel-1

;

Now we have funnel inputs in the format we need.

Let's merge it into our existing model

# use our existing funnel analysis

merge funnel_inputs.funnel into step;

merge funnel_inputs.distinct_id into customer_id;

And run our query!

SELECT

funnel_report.step,

funnel_report.name,

funnel_report.customer_count,

funnel_report.drop_off

ORDER BY

funnel_report.step asc

;

If we need to move this funnel report to another source, we only need to recreate the intermediate mapping logic - the 'funnel_inputs'. The funnel report code will be unchanged.

Let's try it out. We can use some sample Bigquery GA data. It's in event format, so the inputs even look quite similar.

Perhaps we can reuse our event model down the road?

key time datetime;

key event_name string;

key user_id int;

key distinct_id int;

# ga example data

datasource events (

RAW('''cast(TIMESTAMP_MICROS(event_timestamp) as datetime)'''):time,

event_name:event_name,

user_pseudo_id: distinct_id)

address `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131`;

We'll preprocess that to our input format. We'll pick the events that most closely match our desired funnel.

with funnel_inputs as

SELECT

CASE

WHEN event_name = 'first_visit' then 1

WHEN event_name = 'add_to_cart' then 2

WHEN event_name = 'begin_checkout' then 3

WHEN event_name = 'purchase' then 4

END->funnel,

lag 1 funnel over distinct_id order by funnel asc -> prior_step,

distinct_id

having

funnel = 1 or prior_step = funnel-1

;

And now, we can run our same query again:

SELECT

funnel_report.step,

funnel_report.name,

funnel_report.customer_count,

funnel_report.drop_off

ORDER BY

funnel_report.step asc

;

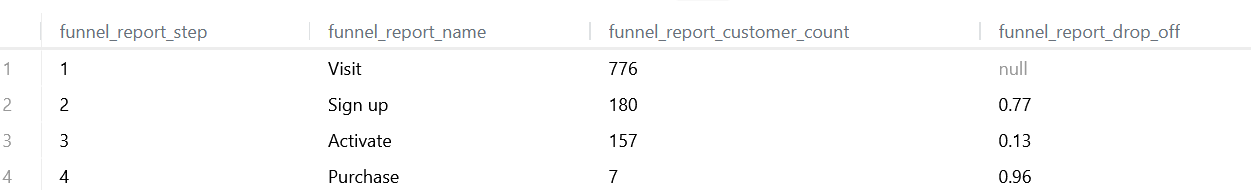

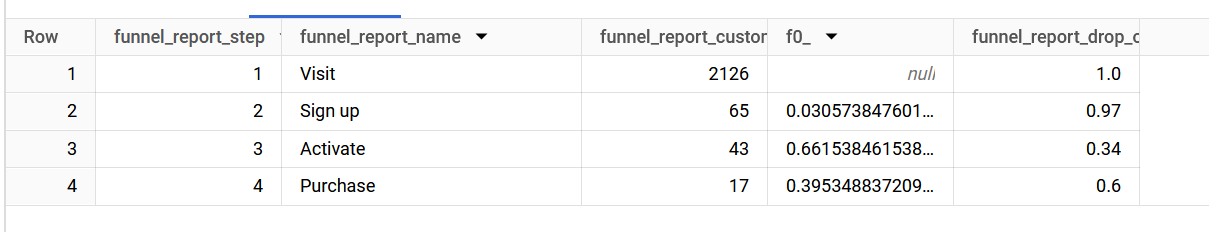

Voila:

That's a lot steeper dropoff! Maybe there's a fun comparative analysis to be done here - we can add a new funnel analysis to break down customers by more attributes, perhaps.

We'll leave that for another blogpost, though.

Recap

In this post, we've covered how to build a reusable analysis template - without any data at all - and then bind it to various sources via an interface layer.

In practice, you'd model this with a funnel.preql file, and then a bq_ecommerce.preql and popsql_ecommerce.preql file.

What other benefits does this bring?

- Ease of refactoring - you can update your funnel logic in one place and keep it consistent.

- Separation of concerns - your funnel logic can be understood abstract from any data source.

- Testability - want to run automated testing on your funnel? Iterate quickly on the query? Hook it up to a synthetic test table in DuckDB.