AI And Trilogy

Trilogy makes your LLMs better. The short reason is that LLMs need context the way we need oxygen: without it they can't achieve any results. But they truly impress when given flexible tooling with fast feedback loops.

So we have two core takes. First, you need a semantic layer. Not because it's trendy, but because richer typing and modeling actually make you more productive, a lesson we've learned from decades of experience with other programming languages. It's really hard to give an LLM the right context to write a perfect query; you need FK/PK constraints, nullability information, cardinality/grain info, details on what the values in a column mean, and more.
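To make that list concrete, here is a minimal Python sketch of the kind of per-field metadata a semantic layer can carry and flatten into LLM context. The ConceptInfo class, its fields, the to_context_line helper, and the flight.* concept names are all hypothetical illustrations, not part of the Trilogy API; a real Trilogy model expresses this information in the Trilogy language itself.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ConceptInfo:
    """Hypothetical per-field metadata a semantic layer might expose."""

    name: str
    data_type: str
    description: str  # what the values in the column actually mean
    nullable: bool = False
    is_key: bool = False  # primary-key style identifier
    grain: list[str] = field(default_factory=list)  # keys that determine one value
    references: Optional[str] = None  # foreign-key style link to another concept

    def to_context_line(self) -> str:
        """Flatten the metadata into a single line for an LLM prompt."""
        parts = [f"{self.name}: {self.data_type}"]
        if self.is_key:
            parts.append("key")
        parts.append("nullable" if self.nullable else "not null")
        if self.grain:
            parts.append("grain=" + ",".join(self.grain))
        if self.references:
            parts.append(f"references {self.references}")
        return f"- {' | '.join(parts)} ({self.description})"


# Hypothetical concepts, loosely modeled on the flight example used later on.
concepts = [
    ConceptInfo("flight.id", "int", "Unique flight identifier",
                is_key=True, grain=["flight.id"]),
    ConceptInfo("flight.carrier_code", "string", "Two-letter airline code",
                grain=["flight.id"], references="carrier.code"),
    ConceptInfo("flight.dep_delay", "int", "Departure delay in minutes; null if cancelled",
                nullable=True, grain=["flight.id"]),
]

for concept in concepts:
    print(concept.to_context_line())
```

Each fact (key, nullability, grain, reference) is something a bare table schema often leaves implicit, which is exactly what a model would otherwise have to guess.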

Second, your semantic layer can't be YAML. It needs to be a first-class language, with functions, logic, and reuse. Forcing humans to context-switch between tools for different parts of their core job will inevitably fail; and since the semantic layer has never been the 'main thing', it's always the thing that gets dropped.

Why Now

Text-to-SQL has been a fairy tale for some time. Modern LLMs actually make it possible, because they excel at transforming unstructured inputs (a business user saying 'give me this thing') into structured outputs. It's now feasible to build a 'clarify, contextualize, and generate' loop similar to what an analyst would do.

And progress has been fast. Two years ago, we needed to lay down rails to walk LLMs along a specific path to produce syntactically valid Trilogy. This worked well, but the cost kept growing as we asked them to answer more and more complex queries.

Today, we can polish some compiler messages, give the model a few examples, and let an agent go wild. And it's safe! Agents don't need database access to explore before building Trilogy queries; they only need a semantic model that gives them the right context, and an expressive language to work in.

We've collectively spent years wrestling with chatbots, NLTK, tokenization, and semantic parsing to get middling results. The world we're in today is magic.

What You Get

We want Trilogy to be an LLM-native (though optional) language, built to deliver the following:

- High precision. Accuracy is non-negotiable.

- No special syntax. Humans need to review and extend the logic, so use the same language for everything.

- Performant. Magic is more magic when it's fast.

- One-shot when possible. Iterative cycles shouldn't be required to get a good answer.

Installation

The core Trilogy SDK comes with AI helpers. Install it with the ai extra to get the additional dependencies (just httpx). You'll also need an API key for your favorite provider: OpenAI, Anthropic, or Google.

```shell
pip install "pytrilogy[ai]"
```

Tips

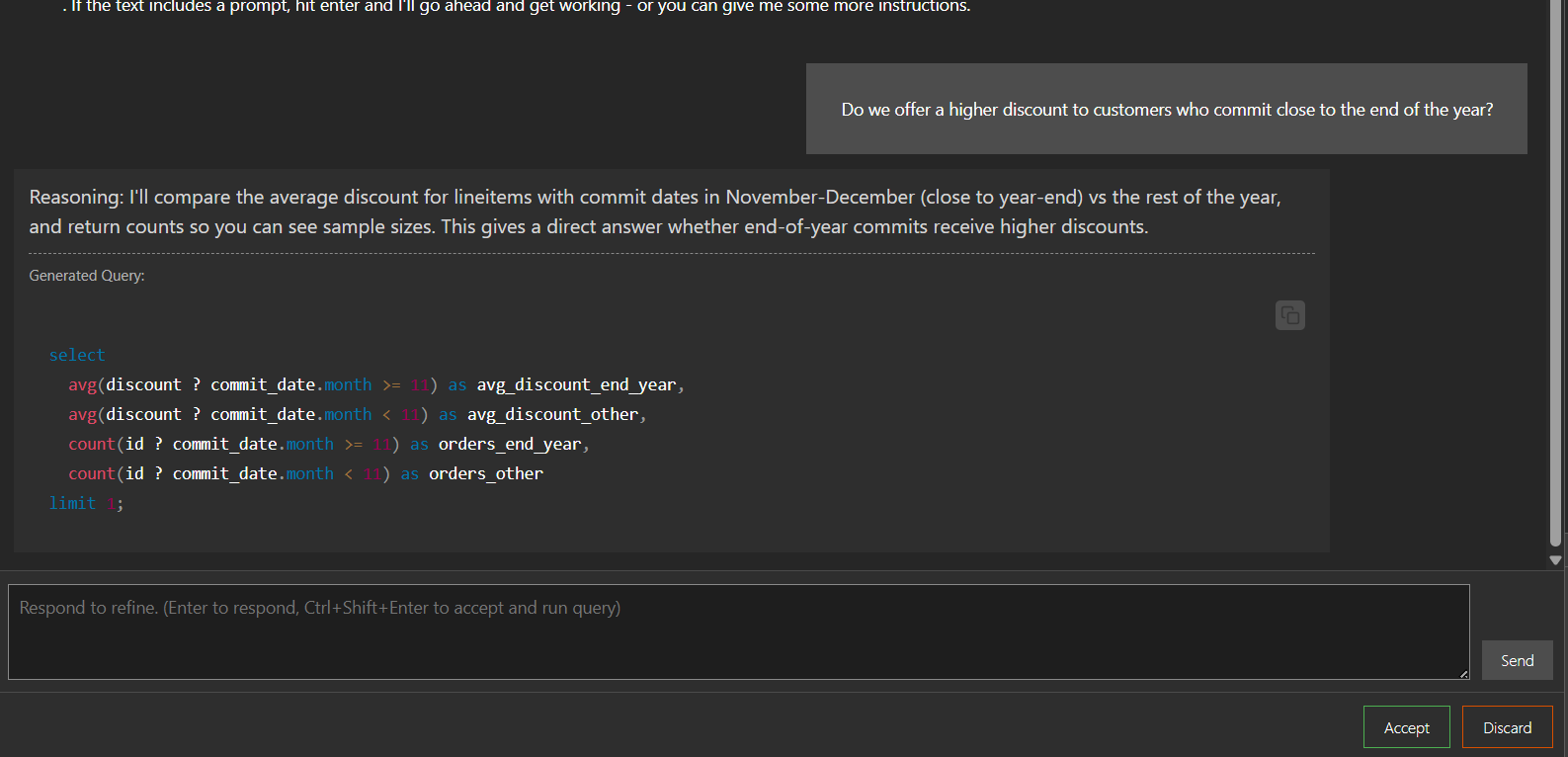

You can also just wrap normal Trilogy query generation in an AI loop of your choice! Trilogy Studio does this on the frontend, for example: the 'here's a model, write a query, syntax-check it, and refine until the errors are gone' loop is straightforward to implement.
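That write, check, and refine loop can be sketched in a few lines of Python. This is a minimal illustration, not Trilogy Studio's implementation: generate stands in for your LLM call and check_syntax for whatever parser you validate against (for Trilogy, the language's own parser). Both are hypothetical callables you supply; here they are stubbed with a toy "must end with a semicolon" rule so the sketch runs on its own, and the stub query strings are illustrative only.

```python
from typing import Callable, Optional

# generate(question, feedback) -> candidate query text (your LLM call)
Generator = Callable[[str, Optional[str]], str]
# check_syntax(query) -> error message, or None if the query parses
Checker = Callable[[str], Optional[str]]


def refine_until_valid(
    question: str,
    generate: Generator,
    check_syntax: Checker,
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask for a query, syntax-check it, and feed errors back until it parses."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        candidate = generate(question, feedback)
        error = check_syntax(candidate)
        if error is None:
            return candidate
        # hand the parser's complaint back to the model on the next attempt
        feedback = f"Previous attempt:\n{candidate}\nError: {error}"
    return None  # out of attempts


# Stubs so the sketch runs without an LLM or a real parser.
def fake_generate(question: str, feedback: Optional[str]) -> str:
    # the first attempt forgets the trailing semicolon; the retry fixes it
    base = "select flight.month, flight.count"
    return base + ";" if feedback else base


def fake_check(query: str) -> Optional[str]:
    # toy rule standing in for a real syntax check
    return None if query.rstrip().endswith(";") else "expected ';' at end of statement"


print(refine_until_valid("number of flights by month", fake_generate, fake_check))
```

Returning None rather than raising keeps the "out of tries" case explicit, so the caller can decide whether to surface an error or fall back to a human.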

Tips

The trilogy-studio-core repo also contains an MCP server example.

Quickstart

```python
import os
from pathlib import Path

from trilogy import Dialects, Environment
from trilogy.ai import Provider, text_to_query

# DuckDB executor with an environment rooted at this script's directory
executor = Dialects.DUCK_DB.default_executor(
    environment=Environment(working_path=Path(__file__).parent)
)

api_key = os.environ.get("OPENAI_API_KEY")
if not api_key:
    raise ValueError("OPENAI_API_KEY required for gpt generation")

# load a model
executor.parse_file("flight.preql")

# create tables in the DB if needed
executor.execute_file("setup.sql")

# generate a query
query = text_to_query(
    executor.environment,
    "number of flights by month in 2005",
    Provider.OPENAI,
    "gpt-5-chat-latest",
    api_key,
)

# print the generated trilogy query
print(query)

# run it and fetch rows from the last statement's result
results = executor.execute_text(query)[-1].fetchall()

assert len(results) == 12
for row in results:
    # all monthly flights are between 5000 and 7000
    assert 5000 < row[1] < 7000, row
```

Details

It's really basic: we give the AI the language syntax rules, some examples, and the concepts from the current model. Then we ask it to generate a query, check the syntax, and repeat until it produces a valid query or runs out of tries. No database required; no big exploratory loops.
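The prompt side of that process is mostly string assembly. Below is a minimal sketch, assuming a plain-text prompt with three sections (syntax rules, worked examples, available concepts) followed by the user's question. The build_prompt name, the section wording, and the example rule, query, and concept strings are hypothetical; this is not the prompt trilogy.ai actually sends.

```python
def build_prompt(
    syntax_rules: list[str],
    examples: list[tuple[str, str]],
    concepts: list[str],
    question: str,
) -> str:
    """Combine syntax rules, worked examples, and model concepts into one prompt."""
    sections = ["You write queries in Trilogy. Follow these syntax rules:"]
    sections += [f"- {rule}" for rule in syntax_rules]
    sections.append("\nExamples:")
    for ask, query in examples:
        sections.append(f"Question: {ask}\nQuery: {query}")
    # only model metadata goes in; no database rows are needed
    sections.append("\nAvailable concepts:")
    sections += [f"- {name}" for name in concepts]
    sections.append(f"\nQuestion: {question}\nQuery:")
    return "\n".join(sections)


# Hypothetical inputs; the example query string is illustrative only.
prompt = build_prompt(
    syntax_rules=["End every statement with a semicolon."],
    examples=[("flights by carrier", "select flight.carrier_code, flight.count;")],
    concepts=["flight.id", "flight.month", "flight.year", "flight.count"],
    question="number of flights by month in 2005",
)
print(prompt)
```

Because every input comes from the model definition rather than the data, the prompt stays small and the whole generation step can run without a database connection.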

Tips

AI messing up? Update your model! Pre-define helpful metrics; add comments; improve typing and naming. It'll help humans too!

Examples

California Sales

Tips

This public instance might take a minute.